Have you ever encountered an error message stating, "This resource is being rate limited"? If so, you're not alone. The message appears when you try to access a resource, such as an API, a web service, or a server, and find your requests restricted or temporarily blocked. Rate limiting is a mechanism used by service providers to control traffic, prevent abuse, and maintain system stability. While it may seem frustrating, understanding why this happens and how to address it can save you from unnecessary stress and confusion.

Rate limiting is a common practice in the digital world, especially in environments where resources are shared among many users. Whether you're a developer, a website owner, or just someone trying to access an online service, you may encounter this limitation. The message "This resource is being rate limited" typically appears when you exceed the allowed number of requests within a specific time frame. This restriction ensures that no single user monopolizes the system, protecting both the service and other users.

In this article, we will delve into the intricacies of rate limiting, exploring its purpose, how it works, and what you can do to resolve it. By the end of this guide, you'll have a clear understanding of the concept, empowering you to navigate these restrictions with confidence. So, whether you're troubleshooting an error or simply curious about the mechanics behind rate limiting, this article has got you covered.

Table of Contents

- What Does "This Resource Is Being Rate Limited" Mean?

- Why Do Services Implement Rate Limiting?

- How Does Rate Limiting Work Technically?

- What Are the Common Causes of Rate Limiting?

- How Can You Identify Rate Limiting Errors?

- What Are the Best Practices to Avoid Rate Limiting?

- How Can You Resolve Rate Limiting Issues?

- Frequently Asked Questions About Rate Limiting

What Does "This Resource Is Being Rate Limited" Mean?

At its core, the message "This resource is being rate limited" means that a restriction has been placed on the number of requests a user can make to a particular resource within a given time frame. This mechanism is implemented by service providers to ensure fair usage and prevent system overload. When you exceed the allowed number of requests, the system temporarily blocks further access, often displaying an error message or status code to inform you of the limitation.

Rate limiting is not a one-size-fits-all approach; it varies depending on the service and its intended use case. For example, APIs used by developers often have strict rate limits to prevent abuse, while websites may impose softer restrictions to manage traffic spikes. Understanding the specific rules of the resource you're accessing is crucial to avoiding rate limiting issues.

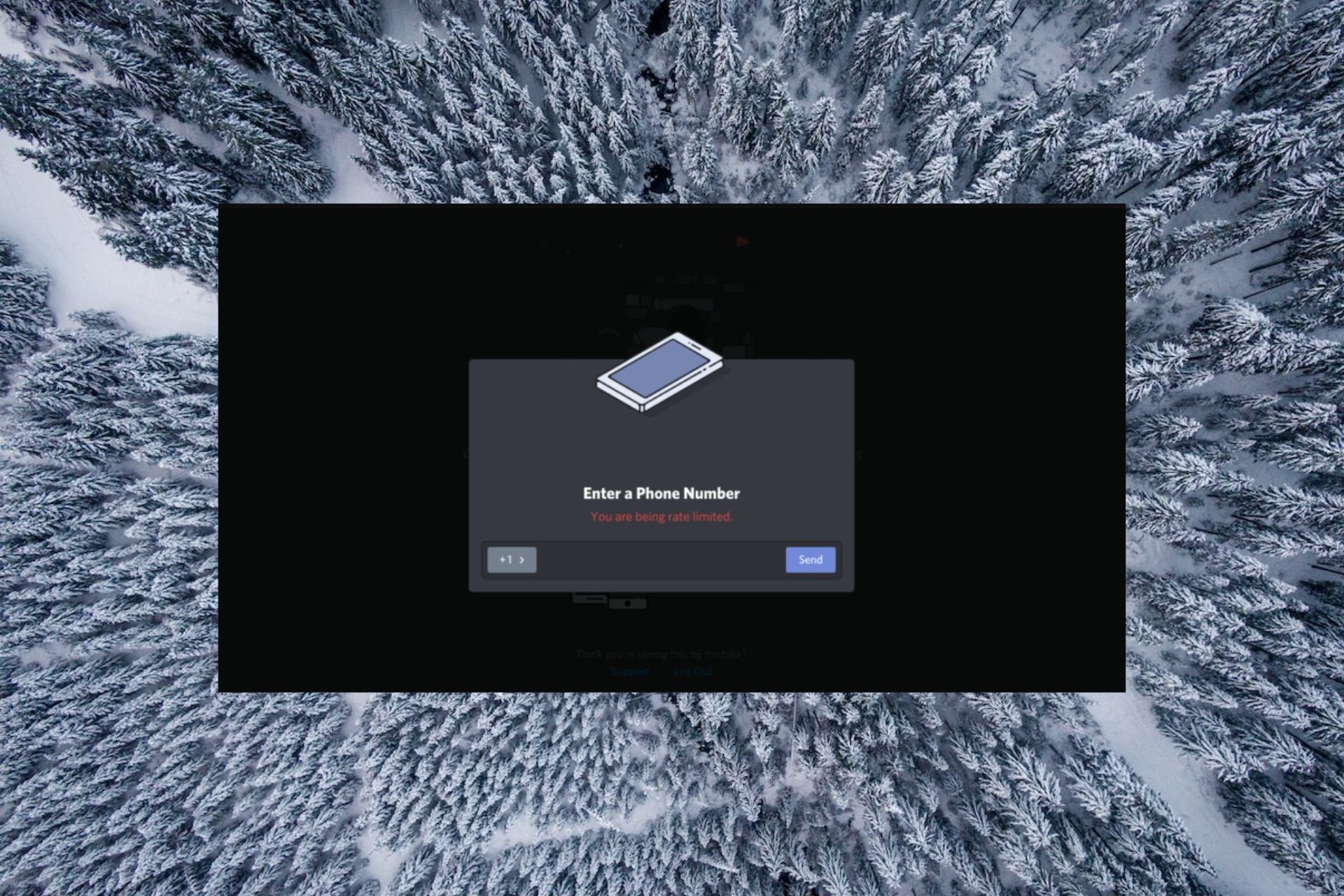

Common indicators of rate limiting include HTTP status codes like 429 (Too Many Requests) or custom error messages from the service provider. These signals are designed to inform users that they need to reduce their request frequency or wait for the restriction to lift. By recognizing these signs early, you can take proactive steps to address the issue and minimize disruptions.

Why Do Services Implement Rate Limiting?

Rate limiting serves several important purposes, each contributing to the overall stability and fairness of a system. First and foremost, it protects the service from being overwhelmed by excessive traffic. Imagine a scenario where a single user or bot sends thousands of requests per second to an API. Without rate limiting, this could lead to server crashes, degraded performance, or even downtime for all users.

Another key reason for implementing rate limiting is to prevent abuse and malicious activities. Hackers and bots often attempt to exploit vulnerabilities in a system by bombarding it with requests. By imposing rate limits, service providers can mitigate these risks and safeguard their infrastructure. This is especially critical for financial services, social media platforms, and other systems handling sensitive data.

Finally, rate limiting ensures equitable access to shared resources. By capping the number of requests per user, providers can allocate resources more fairly, preventing any single user from monopolizing the system. This fosters a more balanced and reliable user experience for everyone involved.

How Does Rate Limiting Work Technically?

Rate limiting is typically implemented using algorithms that track and control the number of requests made by a user. One of the most common methods is the "token bucket" algorithm. In this approach, each user is assigned a virtual bucket that holds up to a fixed number of tokens. Each request consumes one token, and the bucket is refilled at a predefined rate until it is full again. A full bucket therefore allows a short burst of requests, while the refill rate caps the long-term average. If the bucket runs out of tokens, further requests are denied until more tokens become available.
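
As a rough illustration of the token bucket described above, here is a minimal Python sketch. The class name `TokenBucket` and the parameters `capacity`, `refill_rate`, and `clock` are assumptions made for this example, not any particular provider's implementation, and the sketch covers a single user in a single process.

```python
import time


class TokenBucket:
    """Illustrative token bucket: up to `capacity` tokens, refilled at `refill_rate` per second."""

    def __init__(self, capacity, refill_rate, clock=time.monotonic):
        self.capacity = capacity
        self.refill_rate = refill_rate
        self.clock = clock              # injectable so the behaviour can be tested without waiting
        self.tokens = float(capacity)   # the bucket starts full
        self.last_refill = clock()

    def _refill(self):
        # Add tokens for the time elapsed since the last refill, never exceeding capacity.
        now = self.clock()
        elapsed = now - self.last_refill
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_rate)
        self.last_refill = now

    def allow(self):
        """Consume one token if available and return True; otherwise return False."""
        self._refill()
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

A server would typically keep one such bucket per user or API key and answer with an error such as 429 whenever `allow()` returns `False`.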

Another widely used technique is the "leaky bucket" algorithm, which operates on a similar principle but focuses on smoothing out traffic bursts. Requests are placed into a queue of limited size, and the system processes them at a steady rate, regardless of how quickly they arrive. If the queue is full, new requests are rejected. This helps prevent sudden spikes in traffic from overwhelming the system.
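
The queue-based behaviour of the leaky bucket can be sketched in a few lines of Python. The names `LeakyBucket`, `submit`, `drain`, `capacity`, and `leak_rate` are hypothetical, and this sketch assumes the caller polls `drain()` periodically to pick up the requests that are due.

```python
import time
from collections import deque


class LeakyBucket:
    """Illustrative leaky bucket: queue up to `capacity` requests, release `leak_rate` per second."""

    def __init__(self, capacity, leak_rate, clock=time.monotonic):
        self.capacity = capacity
        self.leak_rate = leak_rate
        self.clock = clock
        self.queue = deque()
        self.last_leak = clock()

    def submit(self, request):
        """Queue a request; return False if the bucket is full and the request is rejected."""
        if len(self.queue) >= self.capacity:
            return False
        if not self.queue:
            # Restart the leak timer so idle time does not turn into a burst later.
            self.last_leak = self.clock()
        self.queue.append(request)
        return True

    def drain(self):
        """Return the queued requests that have become due since the last leak."""
        due = int((self.clock() - self.last_leak) * self.leak_rate)
        released = [self.queue.popleft() for _ in range(min(due, len(self.queue)))]
        if due > 0:
            # Advance by whole leak intervals only, so fractional time carries over.
            self.last_leak += due / self.leak_rate
        return released
```

However quickly requests arrive, `drain()` releases them at no more than `leak_rate` per second, which is the smoothing effect described above.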

Service providers often combine these algorithms with monitoring tools to detect unusual patterns of behavior. For example, if a user suddenly starts making an unusually high number of requests, the system may flag this activity for further investigation. These mechanisms work together to enforce rate limits effectively while maintaining system performance.

What Are the Common Causes of Rate Limiting?

Understanding the root causes of rate limiting can help you avoid triggering these restrictions. One of the most frequent culprits is excessive API usage. Developers who rely on APIs for their applications may inadvertently exceed rate limits by making too many requests in a short period. This often happens when debugging code or running automated scripts without proper throttling mechanisms.

Another common cause is bot activity. Automated bots can generate a high volume of requests, either intentionally or unintentionally, leading to rate limiting. For instance, web crawlers used by search engines may overload a server if not properly configured to respect the site's robots.txt file. Similarly, malicious bots attempting to scrape data or exploit vulnerabilities can trigger rate limits as a defensive measure.

Finally, traffic spikes caused by legitimate users can also result in rate limiting. Events like product launches, flash sales, or viral content can attract a sudden influx of visitors, overwhelming the system. While service providers often anticipate these scenarios and scale their infrastructure accordingly, unexpected surges can still lead to temporary restrictions.

How Can You Avoid Triggering Rate Limits?

To avoid triggering rate limits, it's essential to adopt best practices for resource usage. For developers, this includes implementing exponential backoff, which increases the delay between retries after each rate limit response so that repeated retries do not make the problem worse. Additionally, caching frequently accessed data can reduce the number of requests sent to the server.
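
One way to sketch exponential backoff in Python is shown below. The `RateLimited` exception, the `request` callable, and the default values are assumptions for illustration; doubling the delay and adding random jitter is a common choice for this example, not something a particular service requires.

```python
import random
import time


class RateLimited(Exception):
    """Hypothetical error raised by `request` when the service reports a rate limit."""


def call_with_backoff(request, max_retries=5, base_delay=1.0, max_delay=60.0,
                      sleep=time.sleep, jitter=random.random):
    """Call `request()`, retrying with exponentially growing delays when it is rate limited."""
    for attempt in range(max_retries + 1):
        try:
            return request()
        except RateLimited:
            if attempt == max_retries:
                raise  # give up after the final retry
            # The delay doubles on each attempt (1s, 2s, 4s, ...) up to max_delay.
            delay = min(max_delay, base_delay * 2 ** attempt)
            # Sleep a random fraction of the delay so many clients do not retry in lockstep.
            sleep(delay * jitter())
```

If the service tells you how long to wait, for example through a `Retry-After` header, honoring that value is usually preferable to a computed delay.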

For website owners, optimizing your site to handle traffic spikes can help prevent rate limiting. This might involve using content delivery networks (CDNs) to distribute traffic or implementing load balancing to distribute requests across multiple servers. Monitoring tools can also provide valuable insights into traffic patterns, helping you identify potential bottlenecks before they become issues.

How Can You Identify Rate Limiting Errors?

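Recognizing rate limiting errors is the first step toward resolving them. As mentioned earlier, HTTP status codes like 429 (Too Many Requests) are a clear indicator that you've hit a rate limit. These responses are often accompanied by headers that provide additional information. The standard Retry-After header tells you how long to wait before trying again, and many services add their own headers that report the number of requests you have left and the time until the limit resets. The names of these extra headers vary by provider, so check the documentation of the service you are using.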
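
As a small example of reading that information, the Python sketch below interprets a `Retry-After` header, which may contain either a number of seconds or an HTTP date. The function name `retry_after_seconds` is hypothetical, and `headers` is assumed to be a dictionary-like object keyed by the exact header name; real HTTP clients usually offer case-insensitive lookup.

```python
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime


def retry_after_seconds(headers, now=None):
    """Return the wait in seconds suggested by Retry-After, or None if absent or unparseable."""
    value = headers.get("Retry-After")
    if value is None:
        return None
    value = value.strip()
    if value.isdigit():
        # Form 1: a delay in whole seconds, e.g. "120".
        return float(value)
    try:
        # Form 2: an HTTP date, e.g. "Wed, 21 Oct 2015 07:28:00 GMT".
        when = parsedate_to_datetime(value)
    except (TypeError, ValueError):
        return None
    if when.tzinfo is None:
        when = when.replace(tzinfo=timezone.utc)
    now = now or datetime.now(timezone.utc)
    # A date in the past means there is no need to wait.
    return max(0.0, (when - now).total_seconds())
```

A client could call this on a 429 response and sleep for the returned number of seconds before retrying.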

Some services also provide custom error messages or logs to help users understand the issue. For example, an API might return a JSON response with details about the rate limit, including the maximum allowed requests and the current usage. These insights can guide you in adjusting your request patterns to stay within the allowed limits.

Monitoring tools and dashboards can further assist in identifying rate limiting errors. Many platforms offer real-time analytics that highlight unusual activity or spikes in traffic, allowing you to take corrective action before the issue escalates. By staying vigilant and proactive, you can minimize the impact of rate limiting on your operations.

What Tools Can Help You Monitor Rate Limits?

Several tools are available to help you monitor and manage rate limits effectively. For developers, API tools like Postman and Swagger let you send requests and inspect the responses, including status codes and any rate limit headers, which makes it easier to see how close you are to a limit before you automate your calls.

For website owners, analytics platforms like Google Analytics provide insights into traffic patterns, while application monitoring tools like New Relic report on server performance and error rates. Together they can alert you to unusual spikes in traffic or a rise in rate limiting errors, enabling you to address the issue promptly. Additionally, cloud service providers like AWS and Azure offer built-in monitoring solutions to help you manage resource usage.

What Are the Best Practices to Avoid Rate Limiting?

Adopting best practices can significantly reduce the likelihood of encountering rate limiting issues. For developers, one of the most effective strategies is to implement request throttling. This involves spacing out your requests over time to stay within the allowed limits. Using libraries or frameworks that handle throttling automatically can simplify this process.
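
Client-side throttling can be as simple as enforcing a minimum gap between calls. The Python sketch below assumes a hypothetical `Throttle` class and a `max_per_second` value that you would take from the provider's documented limit.

```python
import time


class Throttle:
    """Illustrative throttle: space calls so that at most `max_per_second` are made."""

    def __init__(self, max_per_second, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = 1.0 / max_per_second
        self.clock = clock
        self.sleep = sleep
        self.next_allowed = clock()

    def wait(self):
        """Block until the next call is allowed, then reserve the following slot."""
        now = self.clock()
        if now < self.next_allowed:
            self.sleep(self.next_allowed - now)
            now = self.next_allowed
        self.next_allowed = now + self.min_interval
```

Calling `throttle.wait()` immediately before each request keeps a loop or script at or below the chosen rate.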

Another best practice is to optimize your code to minimize unnecessary requests. For example, caching responses from an API can reduce the number of calls you need to make. Similarly, batching multiple requests into a single call can help you stay within rate limits while improving efficiency.
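
To illustrate the caching idea, here is a minimal time-based cache in Python. `TTLCache`, `fetch`, and `ttl_seconds` are names invented for this sketch; `fetch` stands in for whatever function actually calls the API.

```python
import time


class TTLCache:
    """Illustrative cache: reuse a fetched value for `ttl_seconds` before fetching again."""

    def __init__(self, fetch, ttl_seconds, clock=time.monotonic):
        self.fetch = fetch          # hypothetical function that performs the real API call
        self.ttl = ttl_seconds
        self.clock = clock
        self._entries = {}          # key -> (expires_at, value)

    def get(self, key):
        now = self.clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]         # still fresh: no request is sent
        value = self.fetch(key)     # missing or expired: one real request
        self._entries[key] = (now + self.ttl, value)
        return value
```

The right lifetime depends on how quickly the underlying data changes; a longer `ttl_seconds` saves more requests at the cost of staler results.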

For website owners, scaling your infrastructure to handle increased traffic is crucial. This might involve upgrading your hosting plan, using a CDN to distribute traffic, or implementing load balancing to distribute requests across multiple servers. Regularly monitoring your traffic patterns and usage metrics can also help you anticipate and address potential issues before they arise.

How Can You Resolve Rate Limiting Issues?

If you've already encountered rate limiting, there are several steps you can take to resolve the issue. The first and most straightforward approach is to wait for the rate limit to reset. Most services provide information about when the limit will lift, allowing you to plan accordingly. During this time, you can review your usage patterns and identify areas for improvement.

Another option is to contact the service provider for assistance. Many providers offer support channels where you can request an increase in your rate limit or seek guidance on optimizing your usage. Be prepared to provide details about your use case and justify the need for a higher limit.

Finally, consider implementing fallback mechanisms to handle rate limiting gracefully. For example, you can design your application to queue requests and process them once the limit resets. This ensures that your system remains functional even during periods of restriction.
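
The queue-and-resume fallback might look like the following Python sketch. It assumes a hypothetical `send` function that returns `None` on success or the number of seconds to wait when the service reports a rate limit; `DeferredQueue` and its methods are likewise invented for illustration.

```python
import time
from collections import deque


class DeferredQueue:
    """Illustrative fallback: hold requests while rate limited and send them after the reset."""

    def __init__(self, send, clock=time.monotonic):
        self.send = send                    # hypothetical: None on success, seconds to wait if limited
        self.clock = clock
        self.pending = deque()
        self.resume_at = float("-inf")      # not currently rate limited

    def submit(self, request):
        """Queue a request and try to send whatever is currently allowed."""
        self.pending.append(request)
        return self.flush()

    def flush(self):
        """Send queued requests in order; stop at the first rate limit. Returns the number sent."""
        sent = 0
        while self.pending and self.clock() >= self.resume_at:
            wait = self.send(self.pending[0])
            if wait is not None:
                # Rate limited: keep the request queued and remember when to try again.
                self.resume_at = self.clock() + wait
                break
            self.pending.popleft()
            sent += 1
        return sent
```

An application would call `flush()` from a timer or at the start of each work cycle, so queued requests go out in their original order once the restriction lifts.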

What Should You Do If Rate Limiting Persists?

If rate limiting persists despite your efforts, it may be time to revisit your overall strategy. Start by analyzing your usage patterns to identify any inefficiencies or areas for improvement. This might involve revising your code, optimizing your infrastructure, or exploring alternative services with more flexible rate limits.

Additionally, consider diversifying your resource usage by leveraging multiple providers. For example, if you're relying on a single API, you might explore complementary services that offer similar functionality. This approach not only reduces your dependence on a single provider but also provides a buffer against rate limiting issues.

Frequently Asked Questions About Rate Limiting

What Does "This Resource Is Being Rate Limited" Mean for My Website?

If your website is being rate limited, it means the server or service you're accessing has restricted your requests. This could affect your site's performance, especially if it relies heavily on external APIs or services. To address this, optimize your resource usage and consider upgrading your hosting plan or infrastructure.

How Can I Check My Current Rate Limit Usage?

Most services provide headers or dashboards that display your current rate limit usage. Look for metrics like "requests remaining" or "time until reset" in the API response. Monitoring tools can also help you track your usage in real time.

Can Rate Limiting Be Disabled or Increased?

While some services allow you to request an increase in your rate limit, it's not always possible to disable it entirely. Contact the service provider to discuss your needs and explore potential solutions. Be prepared to justify your request with evidence of your usage patterns and requirements.

Conclusion

Rate limiting is a necessary mechanism for maintaining the stability and fairness of shared resources. While encountering the message "This resource is being rate limited" can be frustrating, understanding its purpose and how to address it can help you navigate these challenges effectively. By adopting best practices, monitoring