Have you ever wondered why certain actions on a website seem restricted or delayed? This phenomenon is often due to rate limiting, a crucial mechanism implemented by websites to manage traffic and maintain performance. Rate limiting is a process that controls the number of requests a user or system can make within a specific time frame. By doing so, websites ensure smooth operation, prevent overloads, and safeguard against malicious activities like brute-force attacks. Understanding what is being rate limited on a website can help users and developers alike navigate and optimize their online experiences.

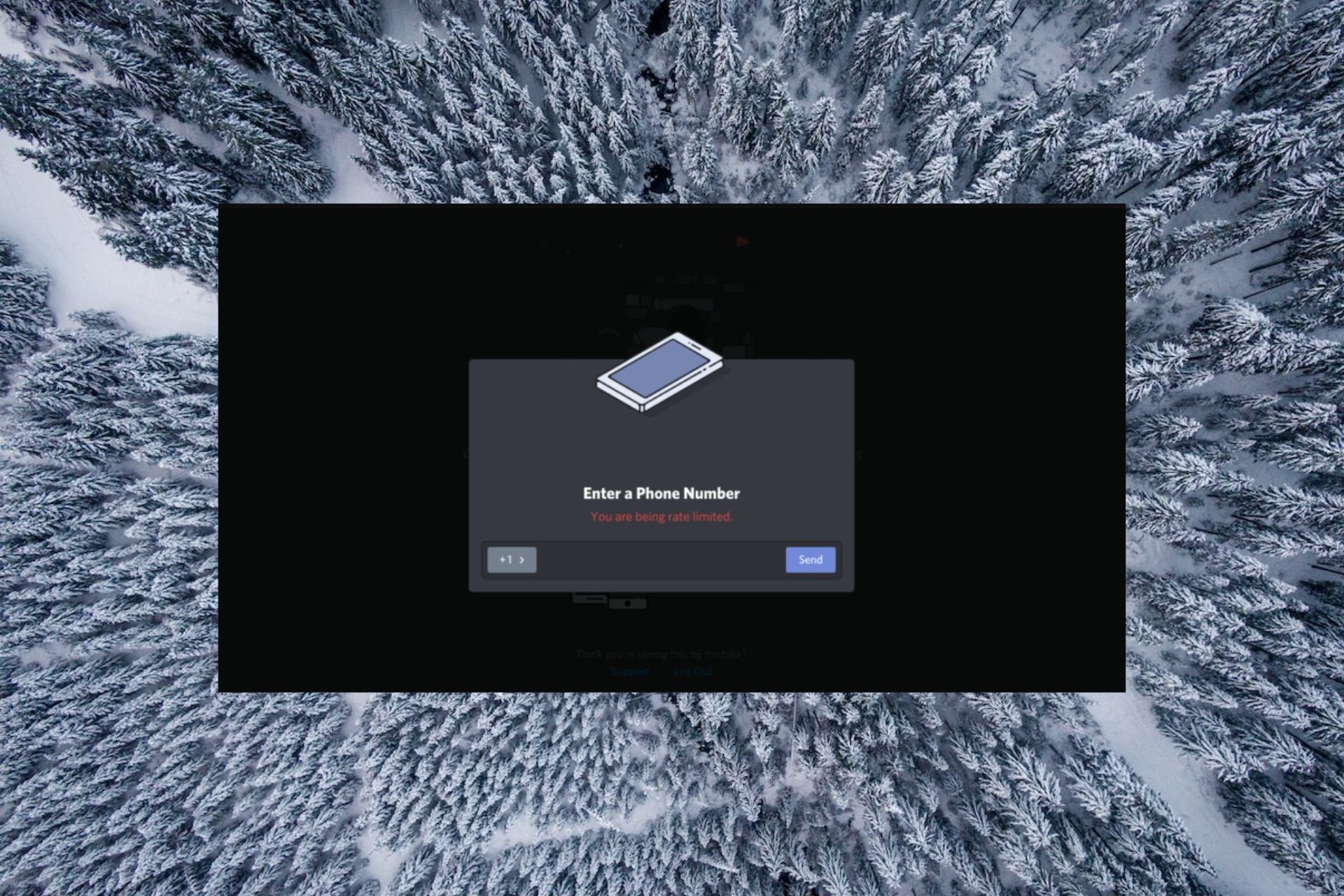

In today’s digital age, where websites and applications are accessed by millions of users simultaneously, rate limiting plays a pivotal role in ensuring fair usage and preventing abuse. From APIs to login attempts, rate limits are applied to various components of a website to protect its infrastructure. For instance, when you repeatedly refresh a webpage or submit forms in quick succession, you might encounter a message like "Too many requests." This is a direct result of rate limiting in action.

While rate limiting might seem like a barrier at times, it serves a greater purpose. It protects both the website and its users by maintaining system stability, reducing downtime, and preventing unauthorized access. Whether you’re a casual user, a developer, or a business owner, understanding the nuances of rate limiting can empower you to make informed decisions. So, what exactly is being rate limited on a website, and how does it affect your online interactions? Let’s dive deeper into this topic.


Table of Contents

- What is Rate Limiting?

- Why Are Websites Implementing Rate Limits?

- What Are Common Examples of Rate-Limited Components?

- How Does Rate Limiting Impact Users?

- How Can Developers Optimize for Rate Limits?

- What Are the Drawbacks of Rate Limiting?

- How Can Users Avoid Rate Limiting Issues?

- Frequently Asked Questions

What is Rate Limiting?

Rate limiting is a technique used by websites and applications to control the number of requests a user or system can make within a given time frame. It acts as a safeguard to prevent overuse, abuse, or system overload. For example, if a user sends too many requests in a short period, the server may temporarily block further requests until the limit resets. This ensures that resources are distributed fairly among all users.
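The "block until the limit resets" behavior described above can be sketched with a simple fixed window counter. This is only an illustration, not how any particular website implements it: the class name `FixedWindowLimiter`, its parameters, and the injectable `clock` are assumptions made for the example.

```python
import time
from collections import defaultdict


class FixedWindowLimiter:
    """Allow at most `limit` requests per client in each `window_seconds` window."""

    def __init__(self, limit, window_seconds, clock=time.monotonic):
        self.limit = limit
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so the limiter can be tested without sleeping
        # (client_id, window_index) -> requests seen in that window.
        # Old windows are never evicted here; a real service would expire them.
        self.counts = defaultdict(int)

    def allow(self, client_id):
        window_index = int(self.clock() // self.window_seconds)
        key = (client_id, window_index)
        if self.counts[key] >= self.limit:
            return False  # over the limit until the next window starts
        self.counts[key] += 1
        return True
```

With `limit=3` and `window_seconds=60`, a client's fourth call to `allow()` inside the same minute returns `False`, and calls succeed again once the next window begins. Each client is counted separately, which is what keeps one heavy user from consuming everyone else's share.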

Rate limiting is implemented through various algorithms, such as token bucket, leaky bucket, and fixed window counters. Each method has its own approach to managing request rates. For instance, the token bucket algorithm keeps a bucket that holds up to a fixed number of tokens. Each request consumes one token, and tokens are added back at a steady rate until the bucket is full. When the bucket is empty, further requests are rejected or delayed. This lets users make short bursts of requests, up to the bucket's capacity, while keeping their long-run average rate at or below the refill rate.
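A minimal token bucket might look like the sketch below. The names `TokenBucket`, `capacity`, and `refill_rate` are chosen for this example, and the refill is computed lazily on each call rather than by a background timer, which is one common way to do it but not the only one.

```python
import time


class TokenBucket:
    """Token bucket: bursts up to `capacity`, sustained rate of `refill_rate` per second."""

    def __init__(self, capacity, refill_rate, clock=time.monotonic):
        self.capacity = capacity
        self.refill_rate = refill_rate  # tokens added per second
        self.clock = clock  # injectable so the bucket can be tested without sleeping
        self.tokens = float(capacity)  # start with a full bucket
        self.last_refill = clock()

    def _refill(self):
        # Add the tokens earned since the last call, never exceeding capacity.
        now = self.clock()
        elapsed = now - self.last_refill
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_rate)
        self.last_refill = now

    def allow(self, cost=1):
        self._refill()
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False  # bucket is empty: reject (or queue) the request
```

A bucket created with `TokenBucket(capacity=2, refill_rate=1.0)` accepts two requests immediately, rejects a third, and accepts one more after a second has passed. However long the client stays idle, it can never bank more than `capacity` tokens.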

While rate limiting is primarily a backend mechanism, its effects are often visible to users. For example, if you’re using an API and exceed the allowed number of calls, you might receive an HTTP 429 status code, which means "Too Many Requests." The response may also carry a Retry-After header telling the client how long to wait before trying again. This is a clear sign that rate limiting is in place. Understanding these mechanisms helps users and developers work within the constraints and avoid disruptions.

Why Are Websites Implementing Rate Limits?

Websites implement rate limits for several reasons, all of which revolve around maintaining system integrity and enhancing user experience. One of the primary goals is to prevent abuse and malicious activities. For instance, attackers may attempt to brute-force passwords or scrape data by sending an overwhelming number of requests. Rate limiting mitigates these risks by restricting the number of attempts a user can make in a specific timeframe.

Another reason for implementing rate limits is to ensure fair usage. Without rate limiting, a single user or system could monopolize server resources, leaving others unable to access the service. This is particularly important for APIs, where developers rely on consistent performance to build applications. By setting limits, websites can distribute resources evenly and maintain a high-quality experience for all users.

Additionally, rate limiting helps reduce server load and prevent downtime. When a website receives too many requests simultaneously, it can become overwhelmed, leading to crashes or slow performance. By controlling the flow of requests, rate limiting ensures that the server operates within its capacity, providing a stable and reliable service for everyone.


What Are Common Examples of Rate-Limited Components?

API Rate Limiting

APIs are one of the most common areas where rate limiting is applied. Developers use APIs to interact with a website’s backend services, such as retrieving data or performing actions. However, excessive API calls can strain the server, leading to performance issues. To prevent this, websites impose rate limits on API usage.

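For example, popular services like Twitter (now X) and GitHub implement API rate limits to ensure fair usage. Twitter has traditionally counted requests per endpoint in 15-minute windows, while GitHub's REST API sets its limits mainly by whether a request is authenticated: unauthenticated requests get a far smaller hourly allowance than authenticated ones (documented as 60 versus 5,000 requests per hour). Exact numbers change over time, so check each service's current documentation. These limits help maintain system stability and prevent abuse by malicious actors.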

Login Attempts and Security

Another critical area where rate limiting is applied is login attempts. Websites often limit the number of failed login attempts to protect against brute-force attacks. For instance, after three to five failed attempts, a user may be temporarily locked out or required to complete a CAPTCHA challenge.

This measure not only safeguards user accounts but also enhances overall security. By restricting the number of attempts, websites can prevent attackers from guessing passwords or exploiting vulnerabilities. Additionally, rate limiting login attempts reduces the risk of account takeovers, ensuring that users’ data remains safe.
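The temporary lockout described in this section can be sketched as follows. The class `LoginAttemptLimiter`, its default thresholds, and its in-memory dictionaries are assumptions for illustration; a production system would typically keep this state in a shared store and might also track attempts per IP address.

```python
import time


class LoginAttemptLimiter:
    """Lock an account for `lockout_seconds` after `max_failures` consecutive failures."""

    def __init__(self, max_failures=5, lockout_seconds=300, clock=time.monotonic):
        self.max_failures = max_failures
        self.lockout_seconds = lockout_seconds
        self.clock = clock  # injectable so the logic can be tested without sleeping
        self.failures = {}  # username -> consecutive failed attempts
        self.locked_until = {}  # username -> time at which the lockout expires

    def is_locked(self, username):
        return self.clock() < self.locked_until.get(username, 0.0)

    def record_failure(self, username):
        count = self.failures.get(username, 0) + 1
        if count >= self.max_failures:
            self.locked_until[username] = self.clock() + self.lockout_seconds
            count = 0  # start counting afresh once the lockout expires
        self.failures[username] = count

    def record_success(self, username):
        # A successful login clears the failure history for that account.
        self.failures.pop(username, None)
        self.locked_until.pop(username, None)
```

A login handler would call `is_locked()` before checking the password, then `record_failure()` or `record_success()` depending on the outcome. Showing a CAPTCHA instead of refusing the attempt is a policy choice layered on top of the same counter.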

How Does Rate Limiting Impact Users?

While rate limiting is essential for maintaining system stability, it can sometimes impact users negatively. For instance, if a user exceeds the allowed number of requests, they may encounter errors or delays. This can be frustrating, especially for those who rely on APIs or frequently interact with a website’s features.

However, the impact of rate limiting can be minimized by understanding its purpose and working within the set limits. For example, users can space out their requests or optimize their interactions to stay within the allowed thresholds. Additionally, websites often provide clear error messages and guidelines to help users navigate rate limits effectively.

On the positive side, rate limiting ensures a fair and consistent experience for all users. By preventing abuse and overuse, it helps maintain system performance and reliability. This benefits everyone, as users can access the website or service without interruptions or slowdowns.

How Can Developers Optimize for Rate Limits?

Best Practices for API Usage

Developers can optimize their applications by adhering to best practices for API usage. One of the most effective strategies is to cache responses whenever possible. By storing frequently accessed data locally, developers can reduce the number of API calls and stay within rate limits.
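As a rough sketch of response caching, the class below keeps each result for a fixed time-to-live and only calls the API again once the entry has expired. `TTLCache` and the `fetch` callable are hypothetical names; `fetch` stands in for whatever function actually performs the API request.

```python
import time


class TTLCache:
    """Cache results of `fetch(key)` for `ttl_seconds` to avoid repeated API calls."""

    def __init__(self, fetch, ttl_seconds, clock=time.monotonic):
        self.fetch = fetch  # callable that performs the real (rate-limited) request
        self.ttl_seconds = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested without sleeping
        self.entries = {}  # key -> (expires_at, value)

    def get(self, key):
        now = self.clock()
        entry = self.entries.get(key)
        if entry is not None and now < entry[0]:
            return entry[1]  # still fresh: no API call needed
        value = self.fetch(key)  # missing or expired: fetch and store again
        self.entries[key] = (now + self.ttl_seconds, value)
        return value
```

The right time-to-live depends on how stale the data is allowed to be. A longer value saves more calls but serves older results.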

Another approach is to implement exponential backoff. When an API call fails due to rate limiting, the application retries after a short delay and multiplies that delay, typically by two, after each further failure, up to a maximum wait and a maximum number of attempts. Adding a small random amount ("jitter") to each delay keeps many clients from retrying at the same instant. This lets the application recover gracefully without overwhelming the server.
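One possible shape for exponential backoff with jitter is sketched below. `RateLimitedError` is a hypothetical exception that the caller's own request code would raise on a rate-limit response, and the default delays are illustrative rather than recommended values.

```python
import random
import time


class RateLimitedError(Exception):
    """Hypothetical error raised by the caller's code when the API reports a rate limit."""


def backoff_delays(base=1.0, factor=2.0, cap=60.0, retries=5):
    """Yield the un-jittered delay before each retry: base, base*factor, ..., capped at `cap`."""
    for attempt in range(retries):
        yield min(cap, base * factor ** attempt)


def call_with_backoff(request, retries=5, base=1.0, cap=60.0,
                      sleep=time.sleep, rng=random.random):
    """Call `request()`, retrying with exponential backoff when it is rate limited."""
    for delay in backoff_delays(base=base, cap=cap, retries=retries):
        try:
            return request()
        except RateLimitedError:
            # "Full jitter": wait a random fraction of the current delay.
            sleep(delay * rng())
    return request()  # final attempt; any error now propagates to the caller
```

With the defaults, the upper bound on the wait grows as 1, 2, 4, 8, and 16 seconds. The `sleep` and `rng` parameters exist only so the function can be exercised in tests without real waiting.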

Handling Rate Limit Errors

Handling rate limit errors effectively is crucial for maintaining a seamless user experience. Developers should design their applications to detect and respond to HTTP 429 errors, which indicate that the rate limit has been exceeded. For example, the application can display a friendly message to the user, explaining the situation and suggesting actions to resolve it.
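A small sketch of detecting a 429 response and turning it into a friendly message is shown below. It assumes the response is available as a status code plus a plain dictionary of headers keyed exactly as `"Retry-After"`; the function names and the one-second fallback are choices made for this example. The Retry-After value may be either a number of seconds or an HTTP date, so both forms are handled.

```python
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime


def retry_after_seconds(header_value, now=None, default=1.0):
    """Convert a Retry-After header value into a wait time in seconds."""
    if header_value is None:
        return default
    value = header_value.strip()
    if value.isdigit():
        return float(value)  # delay given directly in seconds
    try:
        when = parsedate_to_datetime(value)  # delay given as an HTTP date
    except (TypeError, ValueError):
        return default  # unparseable value: fall back to the default wait
    if when.tzinfo is None:
        when = when.replace(tzinfo=timezone.utc)
    now = now or datetime.now(timezone.utc)
    return max(0.0, (when - now).total_seconds())


def user_message(status, headers):
    """Return a friendly message for a rate-limited response, or None otherwise."""
    if status == 429:
        wait = retry_after_seconds(headers.get("Retry-After"))
        return f"Too many requests. Please try again in about {round(wait)} seconds."
    return None
```

The same wait value can also feed a retry loop, so the application pauses for as long as the server asked rather than guessing.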

Additionally, developers can monitor API usage and adjust their strategies accordingly. By analyzing usage patterns, they can identify potential bottlenecks and optimize their code to minimize the risk of hitting rate limits. This proactive approach helps ensure that the application remains functional and reliable.

What Are the Drawbacks of Rate Limiting?

While rate limiting offers numerous benefits, it also has some drawbacks that need to be considered. One of the main challenges is the potential for false positives. Legitimate users or systems may inadvertently exceed rate limits, leading to disruptions in service. For example, a sudden burst of legitimate activity from a single user could trigger rate limiting, and when limits are tracked per IP address, many people sharing one address (such as an office network) can be counted as a single heavy user.

Another drawback is the complexity it adds to development and user experience. Developers need to account for rate limits when designing applications, which can increase development time and effort. Similarly, users may find rate limiting frustrating if they encounter errors or delays, especially if they are unaware of the underlying reasons.

Despite these challenges, the benefits of rate limiting outweigh the drawbacks in most cases. By implementing rate limits thoughtfully and providing clear communication, websites can minimize the negative impact while maximizing the advantages.

How Can Users Avoid Rate Limiting Issues?

Users can take several steps to avoid rate limiting issues and ensure a smooth online experience. One of the simplest strategies is to space out their interactions with a website. For example, instead of submitting multiple forms or refreshing a page repeatedly, users can wait a few seconds between actions.

Another helpful tip is to monitor error messages and follow the website’s guidelines. If a user encounters a rate limit error, they should read the message carefully and adjust their behavior accordingly. For instance, if the error suggests waiting for a specific period, the user can comply to avoid further disruptions.

Additionally, users can reduce the number of requests their browser sends. Leaving the browser cache enabled lets it reuse pages and assets it has already downloaded, and turning off extensions or scripts that reload pages automatically avoids repeated requests the user never intended to make. By adopting these practices, users can minimize the risk of encountering rate limiting issues.

Frequently Asked Questions

What is being rate limited on a website?

Rate limiting on a website typically applies to components like APIs, login attempts, form submissions, and other actions that involve server requests. It ensures fair usage and prevents abuse.

How can I check if a website has rate limits?

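Most websites provide documentation or guidelines about their rate limits, especially for APIs. Many APIs also report the remaining quota in response headers, often with names along the lines of X-RateLimit-Remaining, though the exact names vary by service. You can also watch for HTTP 429 responses to identify rate-limiting behavior.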

What should I do if I hit a rate limit?

If you hit a rate limit, wait for the specified time before retrying your request. Alternatively, optimize your interactions to stay within the allowed thresholds and avoid exceeding the limit in the future.

Conclusion

Rate limiting is a vital mechanism that ensures the stability, security, and fairness of websites and applications. By understanding what is being rate limited on a website, users and developers can navigate these constraints effectively and optimize their online experiences. Whether you’re a casual user or a seasoned developer, adhering to rate limits and implementing best practices can help you avoid disruptions and enhance your interactions with digital platforms.

As websites continue to evolve and handle increasing amounts of traffic, rate limiting will remain a cornerstone of their operations. By embracing this technology and working within its boundaries, we can all contribute to a more stable and secure digital ecosystem.
